Training a Haar cascade in the cloud

In this post I’ll show you how to train a cascade classifier with OpenCV quickly, even on low-end hardware, by using a virtual machine in the cloud.

Why the cloud?

Well, training a cascade takes a lot of time. Depending on the template size and the number of samples and stages, it can take anywhere from several hours to a couple of days to train such a cascade! Be prepared for your PC to be unusable during training due to high RAM usage and CPU load. If you’re on a laptop, it will get hot really quickly. So, what if you have to train your cascade, but you have neither the time nor a spare machine to do it?

Recently I faced this problem in one of my personal projects. Even funnier, a 10-hour flight was approaching, and I didn’t want to waste that time. I only had a laptop, and this task would have drained its battery for sure. So I decided to use a virtual server instead.

Step 1 - Environment setup

First, I created a basic droplet on DigitalOcean. Yep, for $5/month you get a droplet that can do a lot! It takes only 55 seconds to deploy a new instance, and we’re ready to rock! (I assume you’re familiar with SSH keys, the terminal, Git and so on.)

Step 2 - Install latest OpenCV release

There are two ways to do this: either using a package manager (Homebrew, yum or apt-get) or building it from scratch. Personally I prefer the second option, since it lets you configure OpenCV. I usually build static libs with apps but without tests, Java, CUDA, Python, OpenEXR, Jasper and TIFF. Regardless of how you install OpenCV, make sure the OpenCV apps (opencv_createsamples, opencv_traincascade) are installed as well!
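
A from-scratch build with the options listed above could look roughly like the sketch below. It assumes an already unpacked OpenCV 3.x source tree (option names differ a bit between releases, and as far as I know the training apps are no longer built in 4.x). The build_opencv helper is a hypothetical name, not something OpenCV ships.

```shell
# Hypothetical helper: configure, build and install OpenCV from a source tree.
# $1 is the path to the unpacked OpenCV sources (assumed to be a 3.x release).
# The body runs in a subshell, so the caller's working directory is untouched.
build_opencv() (
    set -e
    mkdir -p "$1/build"
    cd "$1/build"
    # Static libs, apps on; tests, Java, Python, CUDA, OpenEXR, Jasper, TIFF off.
    cmake \
        -DCMAKE_BUILD_TYPE=Release \
        -DBUILD_SHARED_LIBS=OFF \
        -DBUILD_opencv_apps=ON \
        -DBUILD_TESTS=OFF \
        -DBUILD_PERF_TESTS=OFF \
        -DBUILD_opencv_java=OFF \
        -DBUILD_opencv_python2=OFF \
        -DBUILD_opencv_python3=OFF \
        -DWITH_CUDA=OFF \
        -DWITH_OPENEXR=OFF \
        -DWITH_JASPER=OFF \
        -DWITH_TIFF=OFF \
        ..
    make -j"$(nproc)"
    sudo make install
)
```

Calling build_opencv with the path to the sources starts the build. Afterwards, running command -v opencv_traincascade is a quick way to check that the apps really got installed.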

Step 3 - Prepare your training data

There are a lot of tutorials (1, 2, 3) on how to train a cascade with OpenCV: which images make good positive and negative samples and which settings to use for training. Let’s assume you have everything in a single folder on your local machine and there is a script called “train.sh” that starts the training stage:

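
A minimal sketch of what such a train.sh might contain, written out with a here-document so it can be created from inside the training folder. The file names positives.vec and negatives.txt and every numeric value are placeholders I made up for illustration; use whatever your own data and the tutorials call for.

```shell
# Create a hypothetical train.sh in the current folder (overwrites an existing one).
cat > train.sh <<'EOF'
#!/bin/sh
# Assumes positives.vec (built with opencv_createsamples) and negatives.txt
# (a list of background images) sit next to this script.
set -e
mkdir -p trainresults

# -numPos should stay below the number of samples stored in the .vec file.
opencv_traincascade \
    -data trainresults \
    -vec positives.vec \
    -bg negatives.txt \
    -numPos 900 \
    -numNeg 2000 \
    -numStages 20 \
    -w 24 -h 24 \
    -featureType HAAR
EOF
chmod +x train.sh
```

The trained cascade ends up in the trainresults directory next to the script.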

Step 4 - Deploy training data to the cloud

The easiest way to upload this folder to your virtual droplet is to use the rsync tool.


For instance, the following command will upload the traindata/ folder with its contents to the /traindata directory on the haartraining.example.com server. This example assumes that your public key was added to haartraining.example.com during droplet creation.

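
A sketch of that upload, wrapped in a small function so it can be reused. The root login is my assumption (it is the usual default on a fresh droplet), and upload_traindata is a hypothetical name.

```shell
# Example host from the text; root is assumed as the login user.
REMOTE="root@haartraining.example.com"

upload_traindata() {
    # -a keeps permissions and timestamps, -v is verbose, -z compresses in transit.
    # The trailing slash on traindata/ copies the folder's contents into /traindata.
    rsync -avz traindata/ "$REMOTE:/traindata"
}
```

Run upload_traindata from the directory that contains traindata/. Since rsync only transfers what has changed, re-running it after tweaking a few files is cheap.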

Step 5 - Start training

The easiest way to run the training is to log in to the remote machine using ssh and execute the train script with a simple command:

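
Logging in and launching the script can also be collapsed into a single ssh call. A sketch, again assuming the root user and the /traindata directory from the upload step (run_training is a hypothetical name):

```shell
# Example host from the text; root is assumed as the login user.
REMOTE="root@haartraining.example.com"

run_training() {
    # -t allocates a terminal so the training output is shown live.
    ssh -t "$REMOTE" 'cd /traindata && ./train.sh'
}
```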

However, this requires you to keep the SSH session open the whole time. If you log out, the process is terminated and the training with it. To prevent this we can use the nohup UNIX utility:

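
A sketch of the nohup invocation, meant to be run on the droplet from inside the directory that holds train.sh. The start_training wrapper is a hypothetical name; the line that matters is the nohup one.

```shell
start_training() {
    # nohup makes the script ignore the hangup signal sent when the SSH session ends.
    # stdout and stderr both go to train.log, and & puts the job in the background.
    nohup ./train.sh > train.log 2>&1 &
    echo "Training started in the background, PID $!"
}
```

After calling start_training you can log out safely. Later on, tail -f train.log shows how far the training has progressed.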

Training will keep running whether or not you stay connected to the terminal, and its output is logged to the file train.log.

Step 6 - Getting the results

After training is done (you can check this in the output of the top command or by looking at train.log), we can download trainresults back with the rsync command:

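
A sketch of both steps, checking progress and fetching the results. It assumes the training wrote its output to /traindata/trainresults and its log to /traindata/train.log on the droplet; the function names are hypothetical.

```shell
# Example host from the text; root is assumed as the login user.
REMOTE="root@haartraining.example.com"

check_progress() {
    # Show the last lines of the training log without opening a full session.
    ssh "$REMOTE" 'tail -n 20 /traindata/train.log'
}

download_results() {
    # Same rsync flags as for the upload, with source and destination swapped.
    rsync -avz "$REMOTE:/traindata/trainresults/" trainresults/
}
```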

Step 7 - Speeding up training

To speed up the training stage, I recommend passing additional options to the opencv_traincascade tool:

- -precalcValBufSize 2048

- -precalcIdxBufSize 2048

Ideally you want to use all the available memory of your instance for these buffers (the values are in megabytes), so if you have 4 GB of RAM installed, pass at least 1 GB to each of them.
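
The rule of thumb above (a quarter of the RAM per buffer) can be computed on the droplet itself. A sketch, assuming a Linux instance where /proc/meminfo is available; buffer_mb is a hypothetical helper name.

```shell
# Quarter of total RAM, in MB, following the "4 GB -> 1 GB per buffer" rule.
# $1 is the total memory in kB, as reported by MemTotal in /proc/meminfo.
buffer_mb() {
    echo $(( $1 / 1024 / 4 ))
}

# Falls back to 0 if /proc/meminfo cannot be read (e.g. not on Linux).
total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo 2>/dev/null || true)
buf=$(buffer_mb "${total_kb:-0}")

# Print the options, ready to be appended to the opencv_traincascade call.
echo "-precalcValBufSize $buf -precalcIdxBufSize $buf"
```

This is the lower bound mentioned above. If nothing else runs on the instance, you can raise the divisor's share and give the buffers more.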

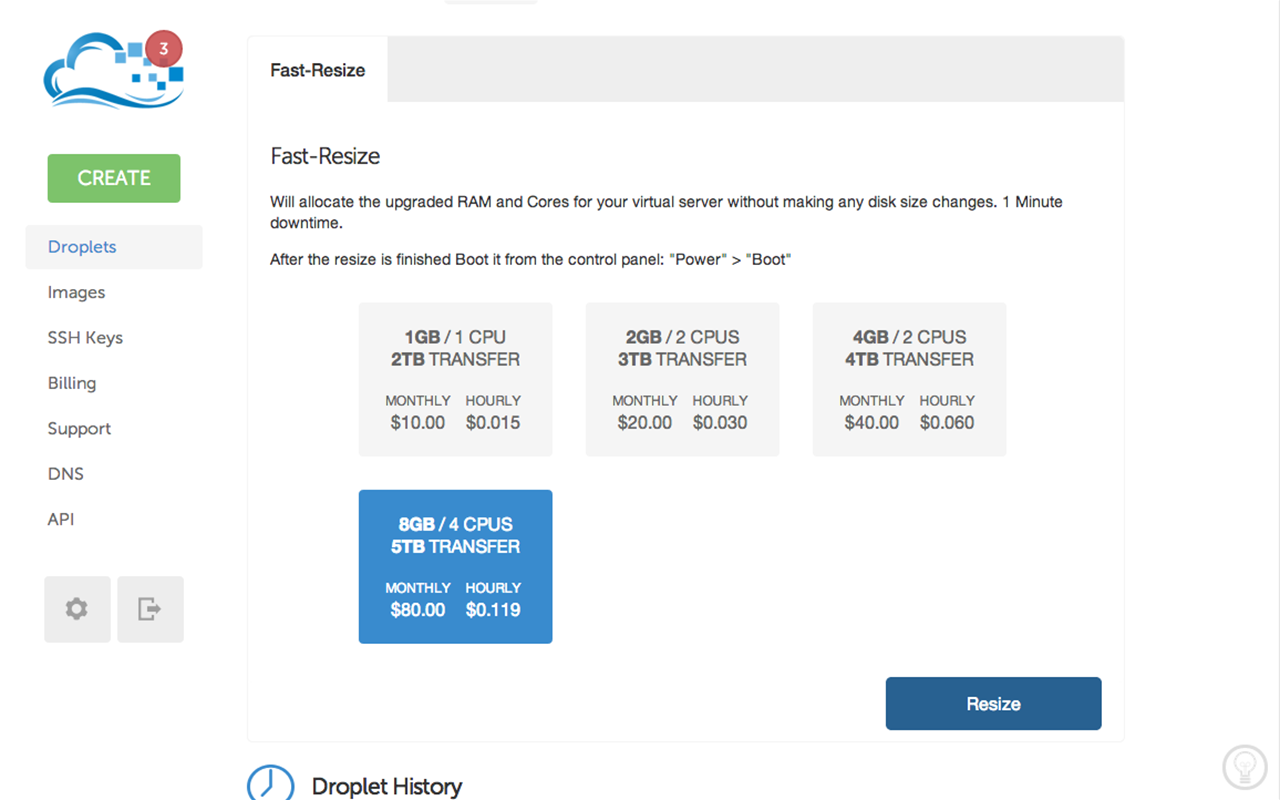

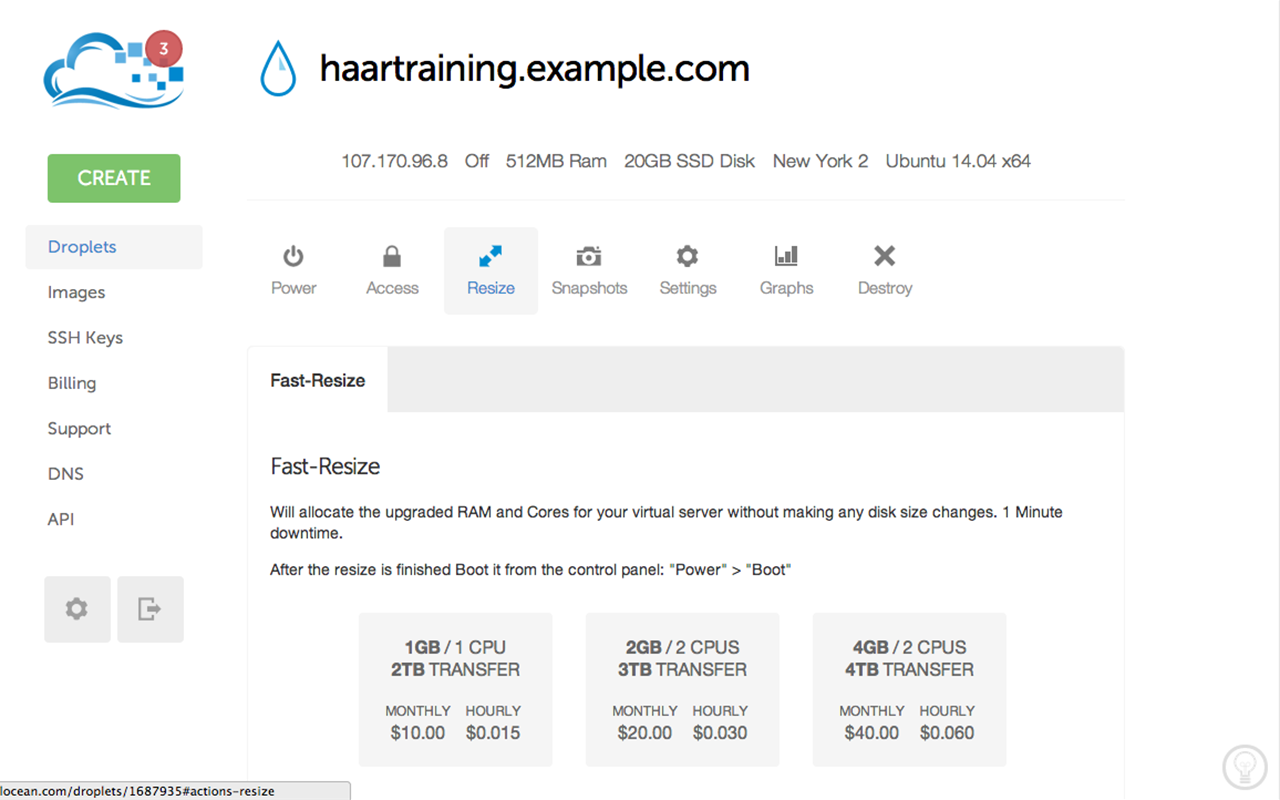

It may also be a good idea to resize the DigitalOcean droplet to a more powerful configuration, one that gives you 16 GB of RAM and 8 CPUs.

This can be done in a few clicks:

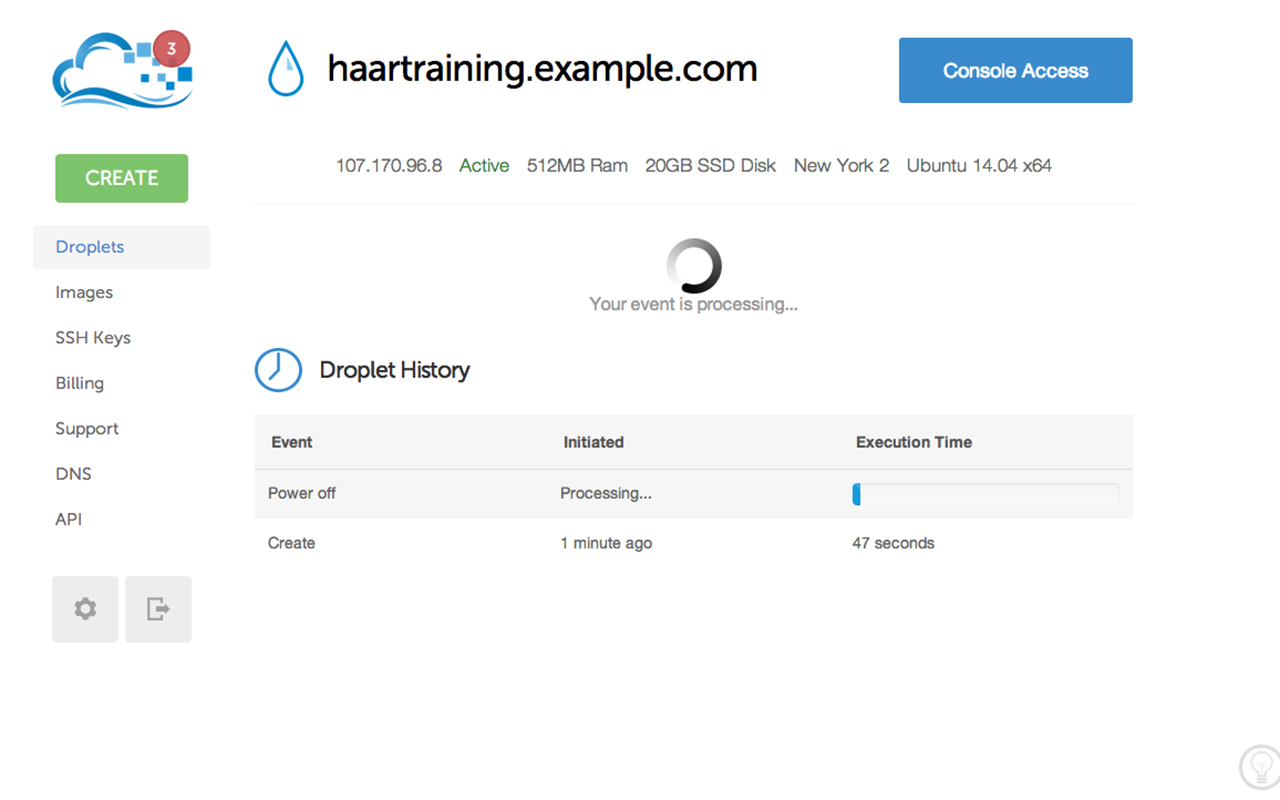

1. Stop your droplet

2. Choose “Resize” and pick the configuration you need

3. Power up your resized droplet

Please be aware that DigitalOcean charges you regardless of whether your droplet is powered on or off. So if you’re not using it, make a snapshot and delete the unused droplet to save money.

That’s all. I hope you enjoyed reading this post. Please leave your comments.